Stack Overflow: The Hardware - 2016 Edition

This is #2 in a very long series of posts on Stack Overflow’s architecture.

Previous post (#1): Stack Overflow: The Architecture - 2016 Edition

Next post (#3): Stack Overflow: How We Do Deployment - 2016 Edition

Who loves hardware? Well, I do and this is my blog so I win. If you don’t love hardware then I’d go ahead and close the browser.

Still here? Awesome. Or your browser is crazy slow, in which case you should think about some new hardware.

I’ve repeated many, many times: performance is a feature. Since your code is only as fast as the hardware it runs on, the hardware definitely matters. Just like any other platform, Stack Overflow’s architecture comes in layers. Hardware is the foundation layer for us, and having it in-house affords us many luxuries not available in other scenarios…like running on someone else’s servers. It also comes with direct and indirect costs. But that’s not the point of this post, that comparison will come later. For now, I want to provide a detailed inventory of our infrastructure for reference and comparison purposes. And pictures of servers. Sometimes naked servers. This web page could have loaded much faster, but I couldn’t help myself.

In many posts through this series I will give a lot of numbers and specs. When I say “our SQL server utilization is almost always at 5–10% CPU,” well, that’s great. But, 5–10% of what? That’s when we need a point of reference. This hardware list is meant to both answer those questions and serve as a source for comparison when looking at other platforms and what utilization may look like there, how much capacity to compare to, etc.

How We Do Hardware

Disclaimer: I don’t do this alone. George Beech (@GABeech) is my main partner in crime when speccing hardware here at Stack. We carefully spec out each server for its intended purpose. What we don’t do is order in bulk and assign tasks later. We’re not alone in this process though; you have to know what’s going to run on the hardware to spec it optimally. We’ll work with the developer(s) and/or other site reliability engineers to best accommodate what is intended live on the box.

We’re also looking at what’s best in the system. Each server is not an island. How it fits into the overall architecture is definitely a consideration. What services can share this platform? This data store? This log system? There is inherent value in managing fewer things, or at least fewer variations of anything.

When we spec out our hardware, we look at a myriad of requirements that help determine what to order. I’ve never really written this mental checklist down, so let’s give it a shot:

- Is this a scale up or scale out problem? (Are we buying one bigger machine or a few smaller ones?)

- How much redundancy do we need/want? (How much headroom and failover capability?)

- Storage:

- Will this server/application touch disk? (Do we need anything besides the spinny OS drives?)

- If so, how much? (How much bandwidth? How many small files? Does it need SSDs?)

- If SSDs, what’s the write load? (Are we talking Intel S3500/3700s? P360x? P3700s?)

- How much SSD capacity do we need? (And should it be a 2-tier solution with HDDs as well?)

- Is this data totally transient? (Are SSDs without capacitors, which are far cheaper, a better fit?)

- Will the storage needs likely expand? (Do we get a 1U/10-bay server or a 2U/26-bay server?)

- Is this a data warehouse type scenario? (Are we looking at 3.5” drives? If so, in a 12 or 16 drives per 2U chassis?)

- Is the storage trade-off for the 3.5” backplane worth the 120W TDP limit on processing?

- Do we need to expose the disks directly? (Does the controller need to support pass-through?)

- Will this server/application touch disk? (Do we need anything besides the spinny OS drives?)

- Memory:

- How much memory does it need? (What must we buy?)

- How much memory could it use? (What’s reasonable to buy?)

- Do we think it will need more memory later? (What memory channel configuration should we go with?)

- Is this a memory-access-heavy application? (Do we want to max out the clock speed?)

- Is it highly parallel access? (Do we want to spread the same space across more DIMMs?)

- CPU:

- What kind of processing are we looking at? (Do we need base CPUs or power?)

- Is it heavily parallel? (Do we want fewer, faster cores? Or, does it call for more, slower cores?)

- In what ways? Will there be heavy L2/L3 cache contention? (Do we need a huge L3 cache for performance?)

- Is it mostly single core performance? (Do we want maximum clock?)

- If so, how many processes at once? (Which turbo spread do we want here?)

- Network:

- Do we need additional 10Gb network connectivity? (Is this a “through” machine, such as a load balancer?)

- How much balance do we need on Tx/Rx buffers? (What CPU core count balances best?)

- Redundancy:

- Do we need servers in the DR data center as well?

- Do we need the same number, or is less redundancy acceptable?

- Do we need servers in the DR data center as well?

- Do we need a power cord? No. No we don’t.

Now, let’s see what hardware in our New York QTS data center serves the sites. Secretly, it’s really New Jersey, but let’s just keep that between us. Why do we say it’s the NY data center? Because we don’t want to rename all those NY- servers. I’ll note in the list below when and how Denver differs slightly in specs or redundancy levels.

Hide Pictures (in case you’re using this as a hardware reference list later)

Servers Running Stack Overflow & Stack Exchange Sites

A few global truths so I need not repeat them in each server spec below:

- OS drives are not included unless they’re special. Most servers use a pair of 250 or 500GB SATA HDDs for the OS partition, always in a RAID 1. Boot time is not a concern we have and even if it were, the vast majority of our boot time on any physical server isn’t dependent on drive speed (for example, checking 768GB of memory).

- All servers are connected by 2 or more 10Gb network links in active/active LACP.

- All servers run on 208V single phase power (via 2 PSUs feeding from 2 PDUs backed by 2 sources).

- All servers in New York have cable arms, all servers in Denver do not (local engineer’s preference).

- All servers have both an iDRAC connection (via the management network) and a KVM connection.

Network

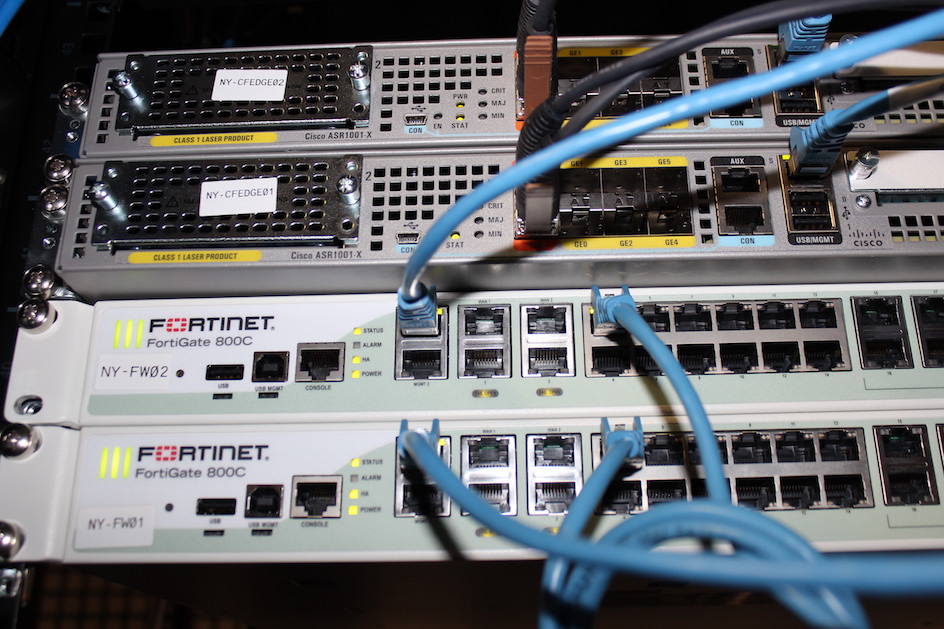

- 2x Cisco Nexus 5596UP core switches (96 SFP+ ports each at 10 Gbps)

- 10x Cisco Nexus 2232TM Fabric Extenders (2 per rack - each has 32 BASE-T ports each at 10Gbps + 8 SFP+ 10Gbps uplinks)

- 2x Fortinet 800C Firewalls

- 2x Cisco ASR-1001 Routers

- 2x Cisco ASR-1001-x Routers

- 6x Cisco 2960S-48TS-L Management network switches (1 Per Rack - 48 1Gbps ports + 4 SFP 1Gbps)

- 1x Dell DMPU4032 KVM

- 7x Dell DAV2216 KVM Aggregators (1–2 per rack - each uplinks to the DPMU4032)

Note: Each FEX has 80 Gbps of uplink bandwidth to its core, and the cores have a 160 Gbps port channel between them. Due to being a more recent install, the hardware in our Denver data center is slightly newer. All 4 routers are ASR-1001-x models and the 2 cores are Cisco Nexus 56128P, which have 96 SFP+ 10Gbps ports and 8 QSFP+ 40Gbps ports each. This saves 10Gbps ports for future expansion since we can bond the cores with 4x 40Gbps links, instead of eating 16x 10Gbps ports as we do in New York.

Here’s what the network gear looks like in New York:

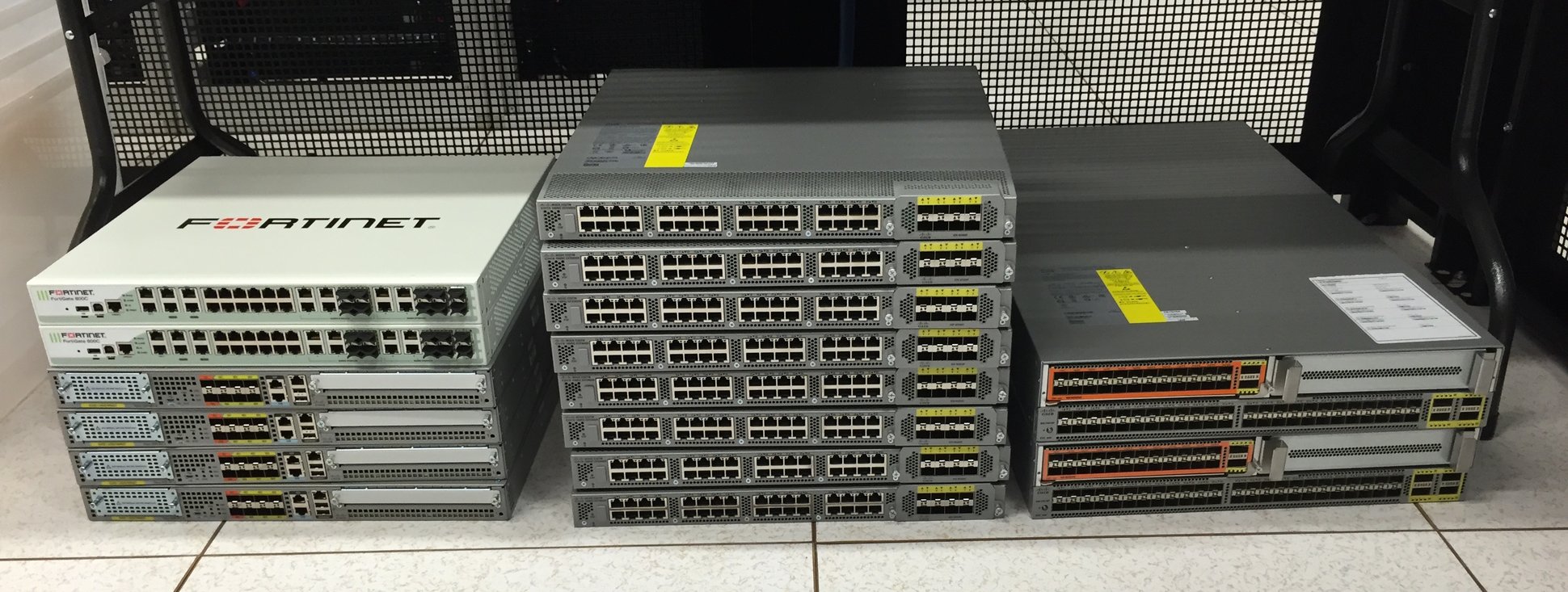

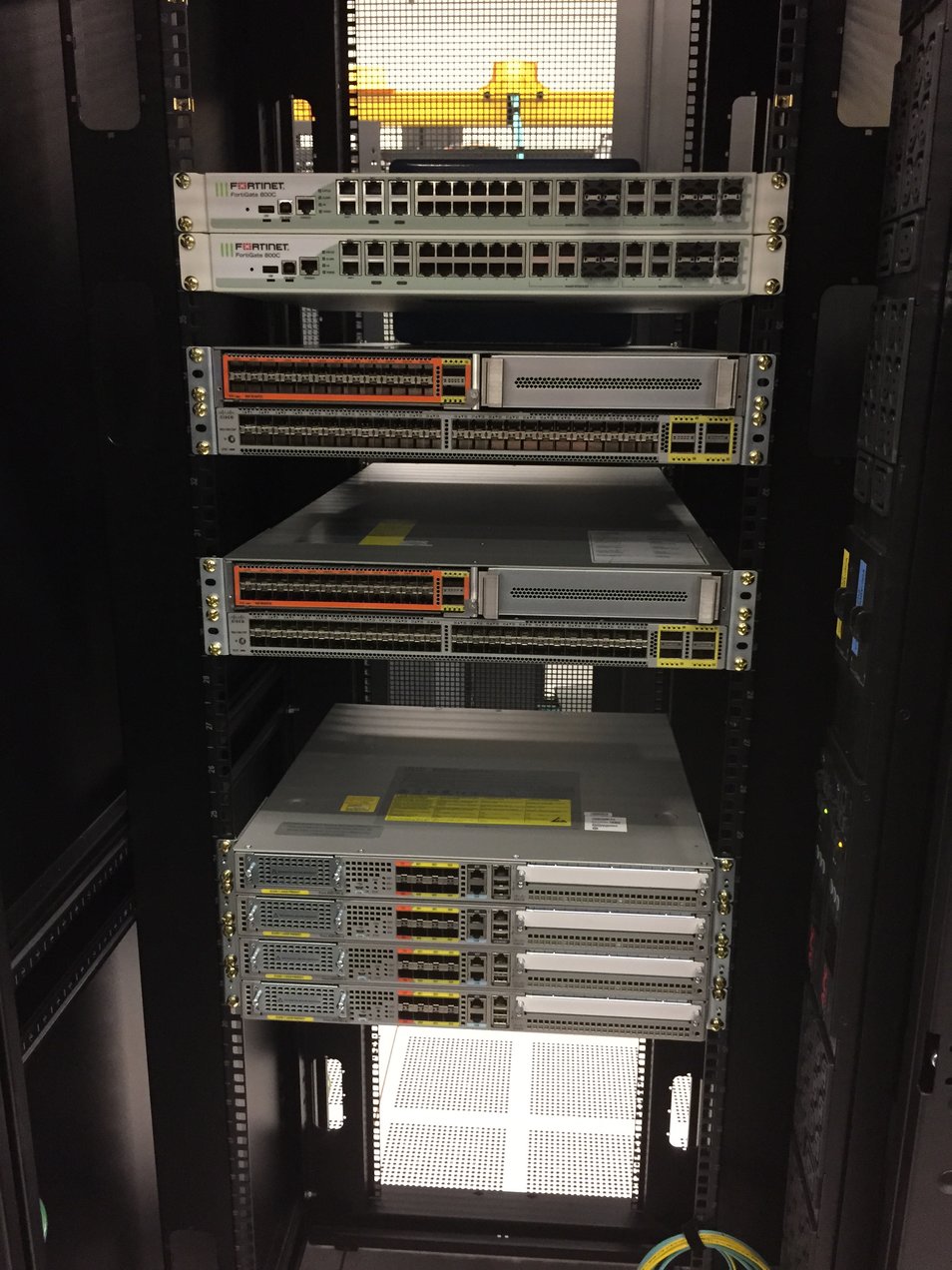

…and in Denver:

Give a shout to Mark Henderson, one of our Site Reliability Engineers who made a special trip to the New York DC to get me some high-res, current photos for this post.

SQL Servers (Stack Overflow Cluster)

- 2 Dell R720xd Servers, each with:

- Dual E5-2697v2 Processors (12 cores @2.7–3.5GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 1x Intel P3608 4 TB NVMe PCIe SSD (RAID 0, 2 controllers per card)

- 24x Intel 710 200 GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

SQL Servers (Stack Exchange “…and everything else” Cluster)

- 2 Dell R730xd Servers, each with:

- Dual E5-2667v3 Processors (8 cores @3.2–3.6GHz each)

- 768 GB of RAM (24x 32 GB DIMMs)

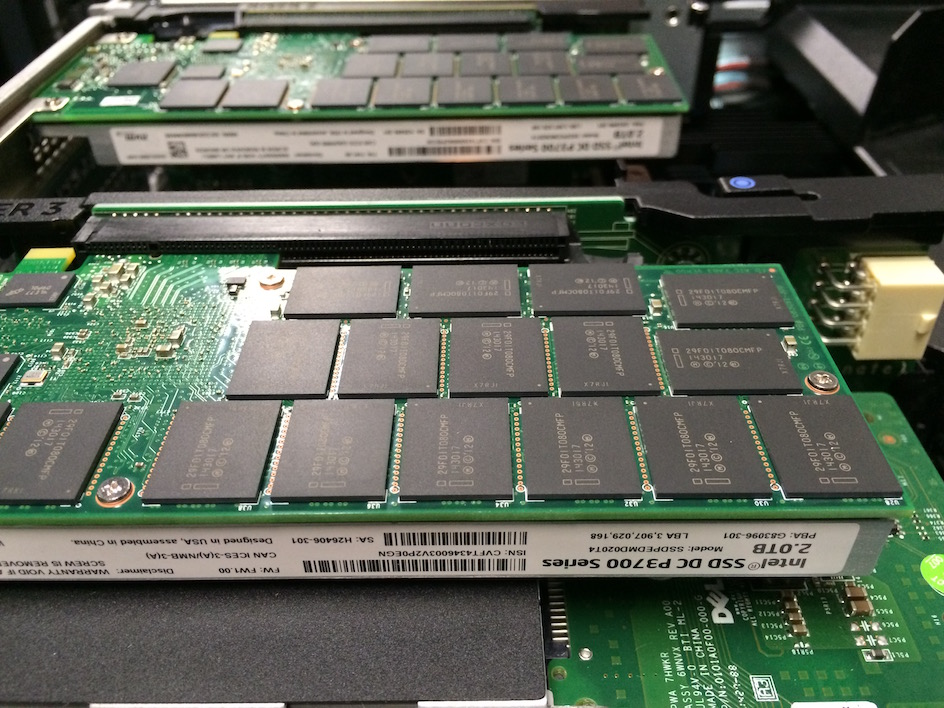

- 3x Intel P3700 2 TB NVMe PCIe SSD (RAID 0)

- 24x 10K Spinny 1.2 TB SATA HDDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Note: Denver SQL hardware is identical in spec, but there is only 1 SQL server for each corresponding pair in New York.

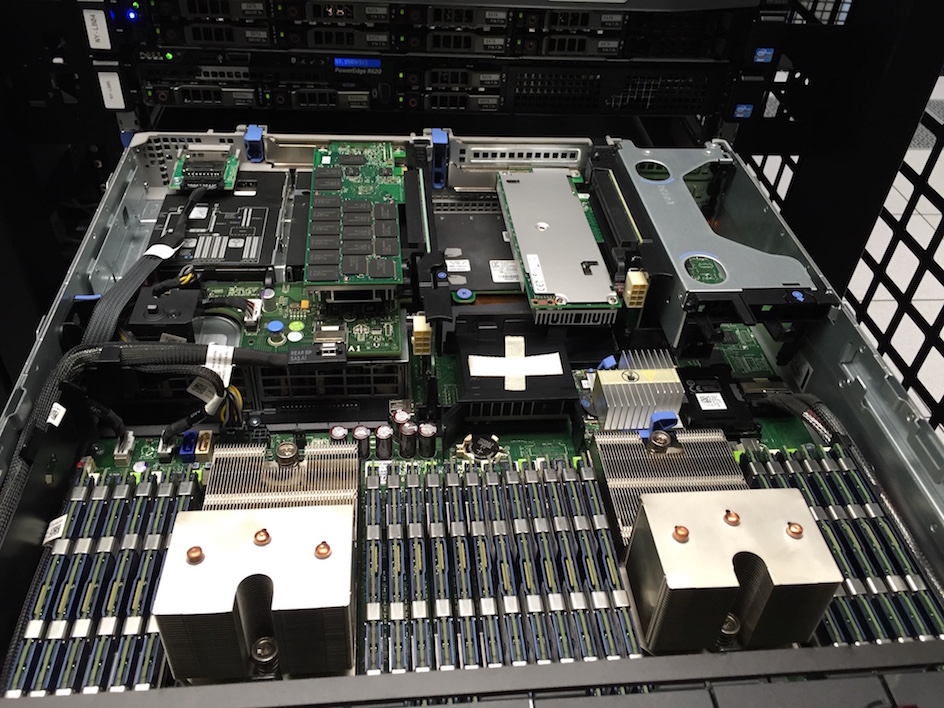

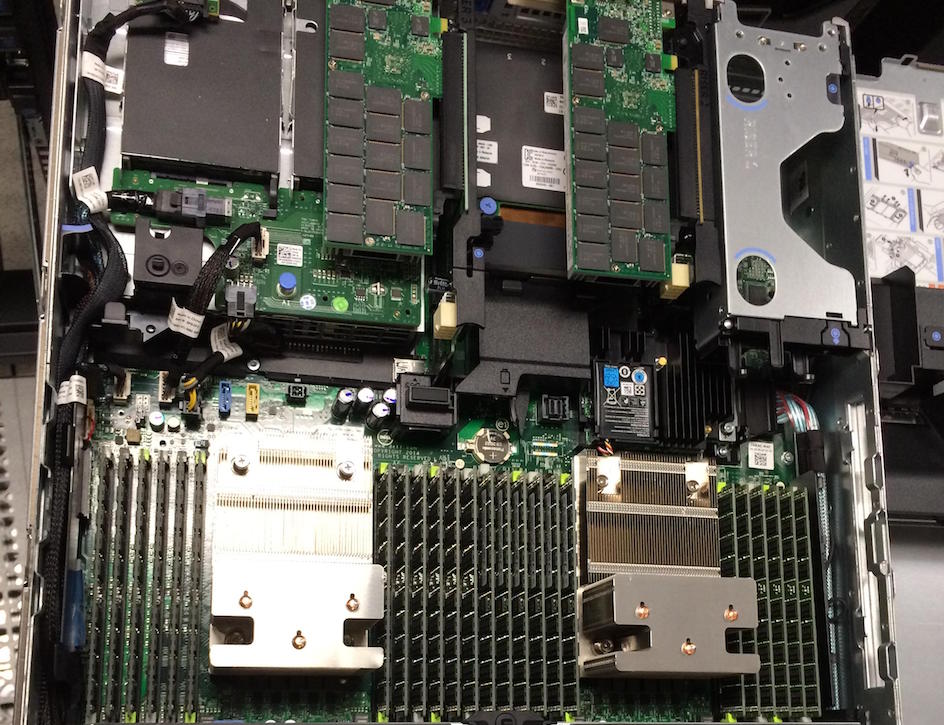

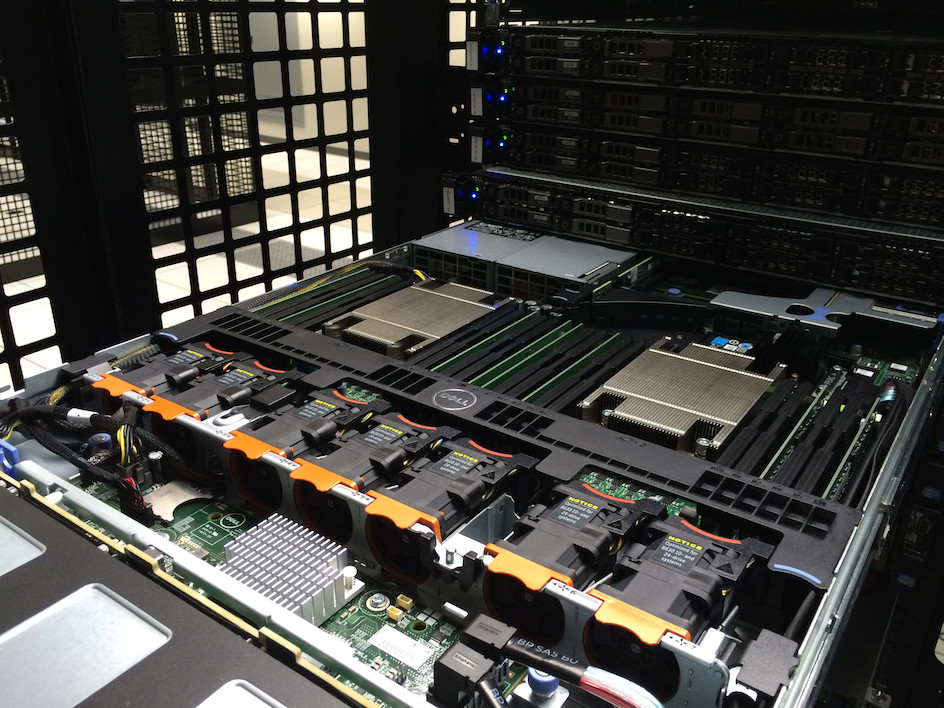

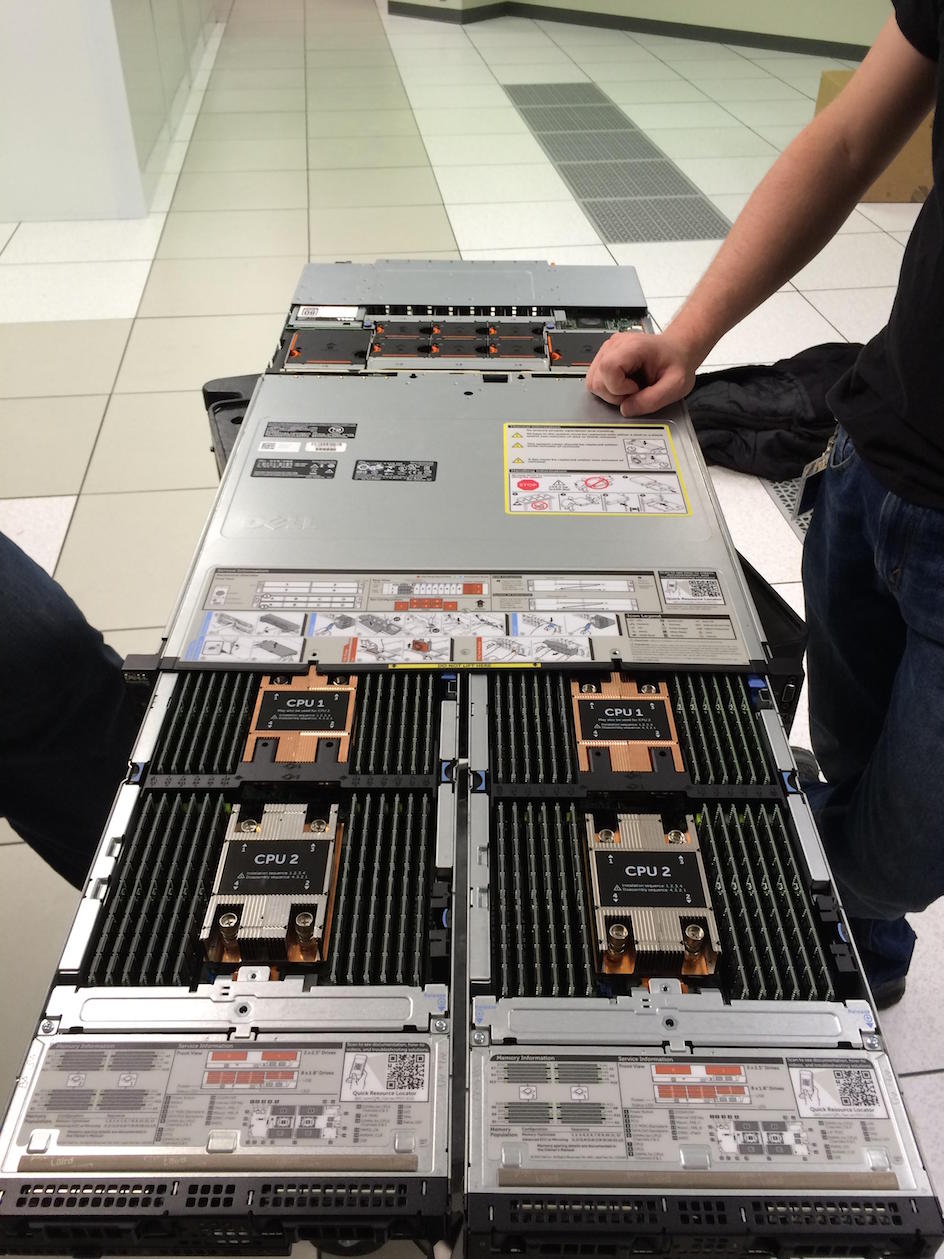

Here’s what the SQL Servers in New York looked like while getting their PCIe SSD upgrades in February:

Web Servers

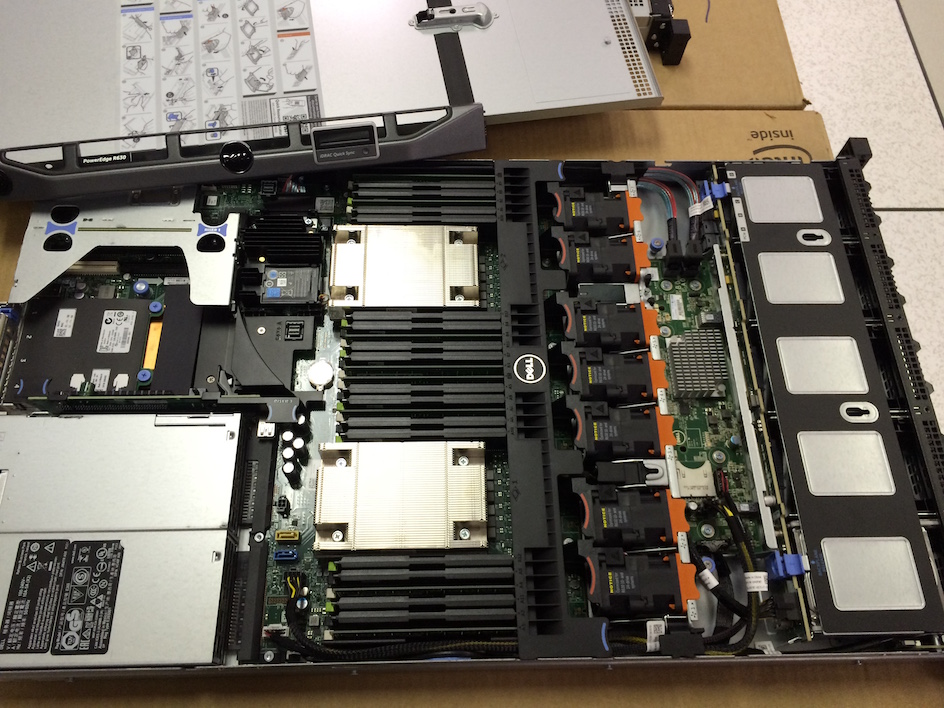

- 11 Dell R630 Servers, each with:

- Dual E5-2690v3 Processors (12 cores @2.6–3.5GHz each)

- 64 GB of RAM (8x 8 GB DIMMs)

- 2x Intel 320 300GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Service Servers (Workers)

- 2 Dell R630 Servers, each with:

- Dual E5-2643 v3 Processors (6 cores @3.4–3.7GHz each)

- 64 GB of RAM (8x 8 GB DIMMs)

- 1 Dell R620 Server, with:

- Dual E5-2667 Processors (6 cores @2.9–3.5GHz each)

- 32 GB of RAM (8x 4 GB DIMMs)

- 2x Intel 320 300GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Note: NY-SERVICE03 is still an R620, due to not being old enough for replacement at the same time. It will be upgraded later this year.

Redis Servers (Cache)

- 2 Dell R630 Servers, each with:

- Dual E5-2687W v3 Processors (10 cores @3.1–3.5GHz each)

- 256 GB of RAM (16x 16 GB DIMMs)

- 2x Intel 520 240GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Elasticsearch Servers (Search)

- 3 Dell R620 Servers, each with:

- Dual E5-2680 Processors (8 cores @2.7–3.5GHz each)

- 192 GB of RAM (12x 16 GB DIMMs)

- 2x Intel S3500 800GB SATA SSDs (RAID 1)

- Dual 10 Gbps network (Intel X540/I350 NDC)

HAProxy Servers (Load Balancers)

- 2 Dell R620 Servers (CloudFlare Traffic), each with:

- Dual E5-2637 v2 Processors (4 cores @3.5–3.8GHz each)

- 192 GB of RAM (12x 16 GB DIMMs)

- 6x Seagate Constellation 7200RPM 1TB SATA HDDs (RAID 10) (Logs)

- Dual 10 Gbps network (Intel X540/I350 NDC) - Internal (DMZ) Traffic

- Dual 10 Gbps network (Intel X540) - External Traffic

- 2 Dell R620 Servers (Direct Traffic), each with:

- Dual E5-2650 Processors (8 cores @2.0–2.8GHz each)

- 64 GB of RAM (4x 16 GB DIMMs)

- 2x Seagate Constellation 7200RPM 1TB SATA HDDs (RAID 10) (Logs)

- Dual 10 Gbps network (Intel X540/I350 NDC) - Internal (DMZ) Traffic

- Dual 10 Gbps network (Intel X540) - External Traffic

Note: These servers were ordered at different times and as a result, differ in spec. Also, the two CloudFlare load balancers have more memory for a memcached install (which we no longer run today) for CloudFlare’s Railgun.

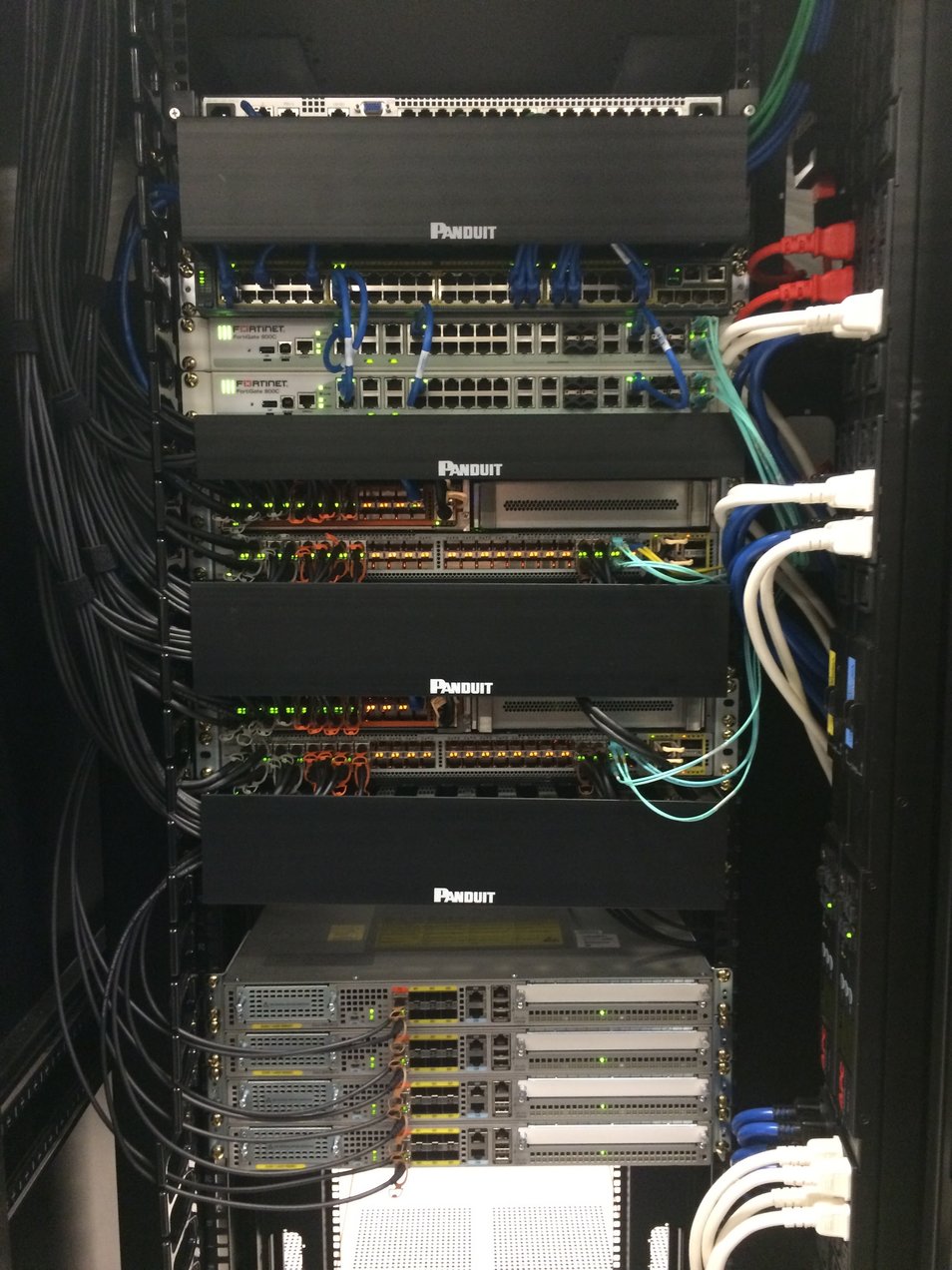

The service, redis, search, and load balancer boxes above are all 1U servers in a stack. Here’s what that stack looks like in New York:

Servers for Other Bits

We have other servers not directly or indirectly involved in serving site traffic. These are either only tangentially related (e.g., domain controllers which are seldom used for application pool authentication and run as VMs) or are for nonessential purposes like monitoring, log storage, backups, etc.

Since this post is meant to be an appendix for many future posts in the series, I’m including all of the interesting “background” servers as well. This also lets me share more server porn with you, and who doesn’t love that?

VM Servers (VMWare, Currently)

- 2 Dell FX2s Blade Chassis, each with 2 of 4 blades populated

- 4 Dell FC630 Blade Servers (2 per chassis), each with:

- Dual E5-2698 v3 Processors (16 cores @2.3–3.6GHz each)

- 768 GB of RAM (24x 32 GB DIMMs)

- 2x 16GB SD Cards (Hypervisor - no local storage)

- Dual 4x 10 Gbps network (FX IOAs - BASET)

- 4 Dell FC630 Blade Servers (2 per chassis), each with:

- 1 EqualLogic PS6210X iSCSI SAN

- 24x Dell 10K RPM 1.2TB SAS HDDs (RAID10)

- Dual 10Gb network (10-BASET)

- 1 EqualLogic PS6110X iSCSI SAN

- 24x Dell 10K RPM 900GB SAS HDDs (RAID10)

- Dual 10Gb network (SFP+)

There a few more noteworthy servers behind the scenes that aren’t VMs. These perform background tasks, help us troubleshoot with logging, store tons of data, etc.

Machine Learning Servers (Providence)

These servers are idle about 99% of the time, but do heavy lifting for a nightly processing job: refreshing Providence. They also serve as an inside-the-datacenter place to test new algorithms on large datasets.

- 2 Dell R620 Servers, each with:

- Dual E5-2697 v2 Processors (12 cores @2.7–3.5GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 4x Intel 530 480GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Machine Learning Redis Servers (Still Providence)

This is the redis data store for Providence. The usual setup is one master, one slave, and one instance used for testing the latest version of our ML algorithms. While not used to serve the Q&A sites, this data is used when serving job matches on Careers as well as the sidebar job listings.

- 3 Dell R720xd Servers, each with:

- Dual E5-2650 v2 Processors (8 cores @2.6–3.4GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 4x Samsung 840 Pro 480 GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Logstash Servers (For ya know…logs)

Our Logstash cluster (using Elasticsearch for storage) stores logs from, well, everything. We plan to replicate HTTP logs in here but are hitting performance issues. However, we do aggregate all network device logs, syslogs, and Windows and Linux system logs here so we can get a network overview or search for issues very quickly. This is also used as a data source in Bosun to get additional information when alerts fire. The total cluster’s raw storage is 6x12x4 = 288 TB.

- 6 Dell R720xd Servers, each with:

- Dual E5-2660 v2 Processors (10 cores @2.2–3.0GHz each)

- 192 GB of RAM (12x 16 GB DIMMs)

- 12x 7200 RPM Spinny 4 TB SATA HDDs (RAID 0 x3 - 4 drives per)

- Dual 10 Gbps network (Intel X540/I350 NDC)

HTTP Logging SQL Server

This is where we log every single HTTP hit to our load balancers (sent from HAProxy via syslog) to a SQL database. We only record a few top level bits like URL, Query, UserAgent, timings for SQL, Redis, etc. in here – so it all goes into a Clustered Columnstore Index per day. We use this for troubleshooting user issues, detecting botnets, etc.

- 1 Dell R730xd Server with:

- Dual E5-2660 v3 Processors (10 cores @2.6–3.3GHz each)

- 256 GB of RAM (16x 16 GB DIMMs)

- 2x Intel P3600 2 TB NVMe PCIe SSD (RAID 0)

- 16x Seagate ST6000NM0024 7200RPM Spinny 6 TB SATA HDDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

Development SQL Server

We like for dev to simulate production as much as possible, so SQL matches as well…or at least it used to. We’ve upgraded production processors since this purchase. We’ll be refreshing this box with a 2U solution at the same time as we upgrade the Stack Overflow cluster later this year.

- 1 Dell R620 Server with:

- Dual E5-2620 Processors (6 cores @2.0–2.5GHz each)

- 384 GB of RAM (24x 16 GB DIMMs)

- 8x Intel S3700 800 GB SATA SSDs (RAID 10)

- Dual 10 Gbps network (Intel X540/I350 NDC)

That’s it for the hardware actually serving the sites or that’s generally interesting. We, of course, have other servers for the background tasks such as logging, monitoring, backups, etc. If you’re especially curious about specs of any other systems, just ask in comments and I’m happy to detail them out. Here’s what the full setup looks like in New York as of a few weeks ago:

What’s next? The way this series works is I blog in order of what the community wants to know about most. Going by the Trello board, it looks like Deployment is the next most interesting topic. So next time expect to learn how code goes from a developers machine to production and everything involved along the way. I’ll cover database migrations, rolling builds, CI infrastructure, how our dev environment is set up, and share stats on all things deployment.